2020-10 update: we reached the first fundraising goal and rented a server at Hetzner for development! Thank you for donating!

Attention! I apologize for the automatic translation of this text. You can improve it by sending me a more correct version of the text or by fixing the HTML pages via the GitHub repository.

Using CBSD with a DFS (Distributed File System)

General information

One of the features that distinguishes CBSD from other modern wrappers for managing jails and bhyve on FreeBSD is that it is not rigidly bound to the ZFS file system. This adds some code overhead when you use only ZFS, but it makes CBSD a more versatile tool that you can use in a wider range of situations.

One such situation is embedded platforms with very limited resources, where ZFS is excessive and resource-hungry, which makes it inefficient on the Raspberry Pi and similar boards. At the opposite end from such minimal setups are large hyperconverged installations that use NAS/SAN and external storage connected via NFS/iSCSI, or distributed storage systems such as GlusterFS and Ceph.

This article covers the use of CBSD in such installations and provides Howto-style application notes.

The general requirement for using CBSD on a DFS, common to all implementations, is to turn off the zfsfeat and hammerfeat options in 'cbsd initenv-tui' and to move the following directories to the shared storage:

- ~cbsd/jails-data: directory with container or virtual machine data

- ~cbsd/jails-system: system directory with additional system information related to the container or virtual machine

- ~cbsd/jails-rcconf: directory used when an environment is switched to the unregistered state

- ~cbsd/jails-fstab: the directory for fstab files

If the working directory (workdir) is initialized in /usr/jails, these are, respectively:

/usr/jails/jails-data
/usr/jails/jails-system
/usr/jails/jails-rcconf
/usr/jails/jails-fstab

You can move these locations to the DFS as a whole and mount all environments in one place; however, the authors recommend splitting the resources per environment. For example, for container jail1, export and mount only the jail1 data, separately from the others:

/usr/jails/jails-data/jail1-data
/usr/jails/jails-system/jail1
/usr/jails/jails-fstab/jail1

This lets you tune file system behavior per environment and reduces the potential impact of one environment on another. For example, if jail1 uses file system locks very aggressively while /usr/jails/jails-data is mounted as a whole, jail2 will be affected too, whereas separate sessions and mounts for /usr/jails/jails-data/jail1-data and /usr/jails/jails-data/jail2-data are independent. In addition, you can use different mount sources: jail1 resources can live on one DFS server and jail2 resources on another. For this, CBSD provides a mounting and unmounting mechanism, described below.

Other directories, such as bases, can also be placed on a shared volume to save space. However, it is much more efficient to keep the base container files locally: with the baserw=0 parameter, this guarantees that the basic utilities and libraries run at local disk speed and are not exposed to possible network problems.

Shared storage also provides an easy way to migrate an environment with zero copying. For example, you can switch the container to the unregistered state on one node:

node1:

% cbsd junregister jname='*'

then register it on another node and start using it there without any copying:

node2:

% cbsd jregister jname='*'
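If you migrate environments often, the two steps can be driven from a single admin host. The sketch below assumes passwordless SSH access to both nodes (node1 and node2 are hypothetical host names) with privileges to run cbsd, and assumes the environment is already stopped; migrate_env and the RUN dry-run switch are illustrative names, not part of CBSD.

```sh
#!/bin/sh
# Sketch: move one environment between two CBSD nodes that share the same
# DFS-backed directories. Hypothetical helper, not a CBSD command.
# Assumptions: SSH access to both nodes, environment already stopped.

# Set RUN=echo to print the commands instead of executing them (dry run).
: "${RUN:=}"

migrate_env() {
	src="$1"    # node where the environment is currently registered
	dst="$2"    # node that should take it over
	jname="$3"  # environment name

	# Step 1: detach the environment from the source node; data stays on the DFS.
	$RUN ssh "$src" cbsd junregister jname="$jname" || return 1
	# Step 2: register the same data on the destination node, no copying involved.
	$RUN ssh "$dst" cbsd jregister jname="$jname"
}
```

For example, migrate_env node1 node2 jail1 performs the same junregister/jregister pair shown above; running it first with RUN=echo shows exactly which commands would be sent to each node.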

Known issues

Some DFS implementations, such as NFSv3 and GlusterFS, require an additional setting in pkg.conf for correct locking:

% echo "NFS_WITH_PROPER_LOCKING = true;" >> /usr/local/etc/pkg.conf
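Running that echo twice appends a duplicate line. A minimal idempotent variant, assuming a POSIX sh and grep (add_pkg_locking is a hypothetical helper name), appends the setting only when it is not already present:

```sh
#!/bin/sh
# Append NFS_WITH_PROPER_LOCKING to pkg.conf only once (hypothetical helper).
add_pkg_locking() {
	conf="${1:-/usr/local/etc/pkg.conf}"   # default path from the command above
	line='NFS_WITH_PROPER_LOCKING = true;'
	# -q: quiet, -x: match the whole line, -F: fixed string (no regex).
	# A missing file counts as "not present", so the line gets written.
	if ! grep -qxF "$line" "$conf" 2>/dev/null; then
		printf '%s\n' "$line" >> "$conf"
	fi
}
```

Calling add_pkg_locking with no argument targets /usr/local/etc/pkg.conf; note that an existing line with different spacing is not recognized and a second copy would be added.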

CBSD hooks for DFS

Once you go beyond the case where all environment data sits on a local ZFS/UFS file system, you face the task of mounting the resource that holds the environment data beforehand. For this, CBSD provides the mnt_start and mnt_stop parameters, in which you specify user scripts that run when the environment starts and stops. Any executable script will do, as long as it follows a few rules:

- Your script must be resilient to repeated mounting. For example, you start the environment, stop it and start it again: your script must not mount on top of the previous mount. Checking whether the resource is already mounted is your script's responsibility;

- Your script must exit with code 0 on success or a non-zero code on fatal errors;

- CBSD does not limit your hook's run time, so avoid situations where your script may hang forever;

- CBSD runs the script with arguments that pass useful information. Your script must parse them with getopt in order to accept them. The keys are:

  -d data: path to the environment data directory;
  -f fstab: path to the fstab directory (relevant mainly for jails);
  -j jname: the name of your environment;
  -r rcconf: path to the rcconf directory;
  -s sysdata: path to the sysdata directory.
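The rules above can be combined into a hook skeleton. This is a minimal sketch, not the example script shipped with CBSD: it parses the keys with the getopts shell builtin, avoids stacking mounts by checking FreeBSD's mount -p output first, and uses NFSv4 only as an example transport. The function names and the NFS_SERVER variable are hypothetical.

```sh
#!/bin/sh
# Skeleton of a mnt_start hook (hypothetical sketch, not the shipped example).
# CBSD calls it as: hook -d data -f fstab -j jname -r rcconf -s sysdata

# Parse CBSD's keys into shell variables; fail if the essentials are missing.
hook_parse_args() {
	data= fstab= jname= rcconf= sysdata=
	OPTIND=1
	while getopts "d:f:j:r:s:" opt; do
		case "$opt" in
		d) data="$OPTARG" ;;
		f) fstab="$OPTARG" ;;
		j) jname="$OPTARG" ;;
		r) rcconf="$OPTARG" ;;
		s) sysdata="$OPTARG" ;;
		*) return 1 ;;   # unknown key: treat as fatal
		esac
	done
	[ -n "$jname" ] && [ -n "$data" ]
}

# Succeed if something is already mounted exactly on directory $1.
# FreeBSD's 'mount -p' prints fstab-style lines: device mountpoint type ...
is_mounted() {
	mount -p | awk -v mp="$1" '$2 == mp { found = 1 } END { exit !found }'
}

# Mount $1 on $2 only once, so start/stop/start cycles do not stack mounts.
mount_once() {
	is_mounted "$2" && return 0
	mkdir -p "$2" || return 1
	# NFSv4 as an example; substitute the mount command for your storage.
	mount_nfs -o nfsv4 "$1" "$2"
}

hook_main() {
	hook_parse_args "$@" || { echo "usage: $0 -j jname -d data ..." >&2; exit 1; }
	# NFS_SERVER is a hypothetical setting, e.g. loaded from a config file;
	# ${VAR:?} aborts with a non-zero status if it is unset.
	mount_once "${NFS_SERVER:?}:/nfs/data/$jname" "$data" || exit 1
	exit 0
}
# A real hook would end with the line:  hook_main "$@"
```

To use it as an actual hook, append hook_main "$@" as the last line, make the file executable and set its path as mnt_start; a matching mnt_stop script would unmount only when is_mounted succeeds.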

As you can see, these parameters, combined with custom scripts for attaching external storage, let you use even exotic storage technologies, for example iSCSI, InfiniBand, GELI, HAST, etc.

For a working example, see the NFSv4 script in the next section.

You may also want to learn about custom clone operations: if you manage your external storage and it can copy data on the storage side, you can avoid the heavy network load of regular data copying and create an instant clone using the external storage. See here and here for details.

CBSD with NFSv4

Using CBSD and jail with NFSv4

We have a separate FreeBSD-based NFS server and a separate CBSD server that will store its jails on the NFS server.

IPv4 address of the NFS server: 172.16.0.1; IPv4 address of the CBSD server: 172.16.0.100

The jail data on the NFS server will be located in the /nfs directory.

Let's configure the NFSv4 server.

In /etc/exports, specify the paths to export:

/nfs -alldirs -maproot=root 172.16.0.100
V4: / 172.16.0.100

If the NFS server uses ZFS, be sure to enable the sharenfs property on the required dataset. For example, if the ZFS root file system is zroot/ROOT/default, NFS export is enabled with the following command:

% zfs set sharenfs=on zroot/ROOT/default

In /etc/rc.conf, enable the services required by the NFS server with the following commands:

sysrc -q nfs_server_enable="YES"
sysrc -q nfsv4_server_enable="YES"
sysrc -q nfscbd_enable="YES"
sysrc -q nfsuserd_enable="YES"
sysrc -q mountd_enable="YES"
sysrc -q rpc_lockd_enable="YES"

Create empty directories for jail1 on the NFS server:

mkdir -p /nfs/data/jail1 /nfs/fstab/jail1 /nfs/rcconf/jail1 /nfs/system/jail1

After rebooting the NFS server or restarting the services, the NFSv4 server is ready for operation.

On the CBSD side, mount the container data with the following commands:

% mount_nfs -o vers=4 172.16.0.1:/nfs/data/jail1 /usr/jails/jails-data/jail1-data
% mount_nfs -o vers=4 172.16.0.1:/nfs/fstab/jail1 /usr/jails/jails-fstab/jail1
% mount_nfs -o vers=4 172.16.0.1:/nfs/rcconf/jail1 /usr/jails/jails-rcconf/jail1
% mount_nfs -o vers=4 172.16.0.1:/nfs/system/jail1 /usr/jails/jails-system/jail1

You can use /etc/fstab entries with the 'late' option to mount these directories when the node boots, or use the automounter (autofs(5)).
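For the fstab approach, the entries follow directly from the per-environment layout used above. The generator below is a sketch: nfs_fstab_lines is a hypothetical helper, and rw,nfsv4,late is one reasonable option set for this setup rather than a CBSD requirement.

```sh
#!/bin/sh
# Print fstab(5) lines that mount one environment's directories from an
# NFSv4 server (hypothetical helper). 'late' defers the mounts until
# 'mount -a -l' runs later in boot, after the network is up.
nfs_fstab_lines() {
	server="$1"   # e.g. 172.16.0.1
	root="$2"     # export root on the server, e.g. /nfs
	workdir="$3"  # CBSD workdir, e.g. /usr/jails
	jname="$4"    # environment name, e.g. jail1

	# Each pair: <subdirectory on the server>:<path relative to the workdir>
	for pair in \
		"data:jails-data/$jname-data" \
		"fstab:jails-fstab/$jname" \
		"rcconf:jails-rcconf/$jname" \
		"system:jails-system/$jname"
	do
		remote="${pair%%:*}"    # text before the first ':'
		local_dir="${pair#*:}"  # text after the first ':'
		printf '%s:%s/%s/%s\t%s/%s\tnfs\trw,nfsv4,late\t0\t0\n' \
			"$server" "$root" "$remote" "$jname" "$workdir" "$local_dir"
	done
}
```

For example, as root, nfs_fstab_lines 172.16.0.1 /nfs /usr/jails jail1 >> /etc/fstab adds the four entries, and mount -a -l mounts the late entries without a reboot (the local mount points must exist).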

However, in this example we demonstrate the mnt_start option with an external custom script for mounting file systems. A working example script ships in the /usr/local/cbsd/share/examples/env_start/nfsv4 directory. It is quite simple and uses a configuration file in which you can adjust the settings for your environment.

Copy the configuration file and fix the IP addresses:

% cp -a /usr/local/cbsd/share/examples/env_start/nfsv4/env_start_nfs.conf ~cbsd/etc/
% vi ~cbsd/etc/env_start_nfs.conf

In our example, the file will look as follows:

NFS_SERVER="172.16.0.1"
NFS_SERVER_ROOT_DIR="/nfs"
MOUNT_RCCONF=0
MOUNT_FSTAB=1
MOUNT_NFS_OPT="-orw -overs=4 -ointr -orsize=32768 -owsize=32768 -oacregmax=3 -oacdirmin=3 -oacdirmax=3 -ohard -oproto=tcp -otimeout=300"

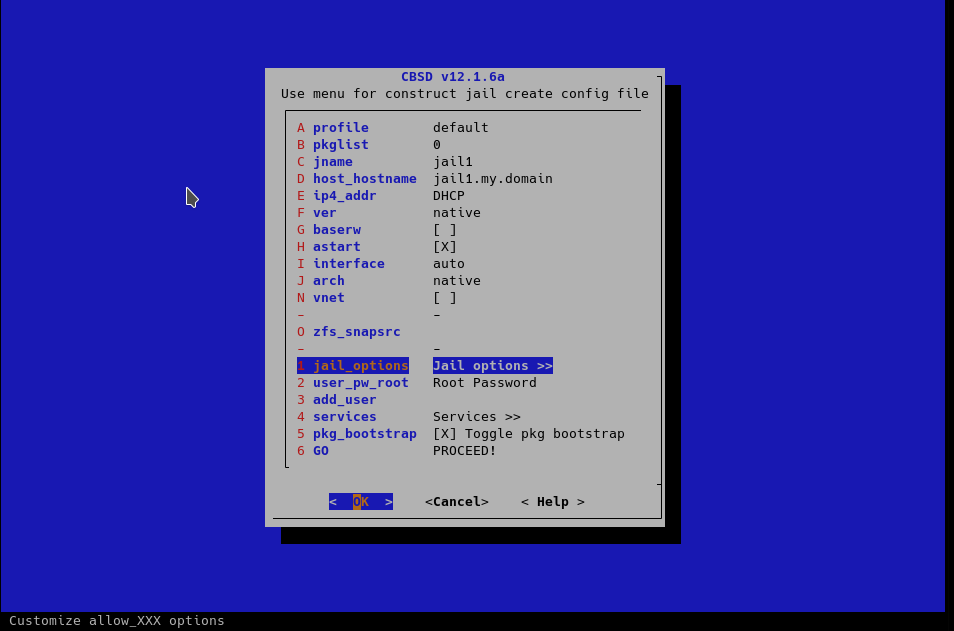

Create the jail via cbsd jconstruct-tui.

Remember that we are creating a container named jail1, so specify jail1 as jname.

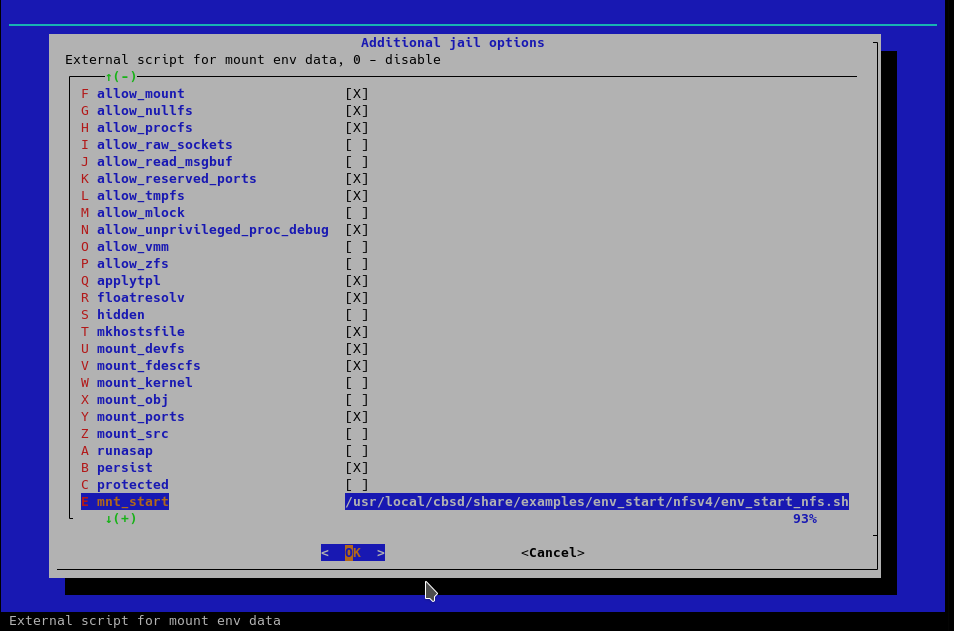

Since we use the script for the mnt_start parameter without modification, we specify its full path directly: /usr/local/cbsd/share/examples/env_start/nfsv4/env_start_nfs.sh

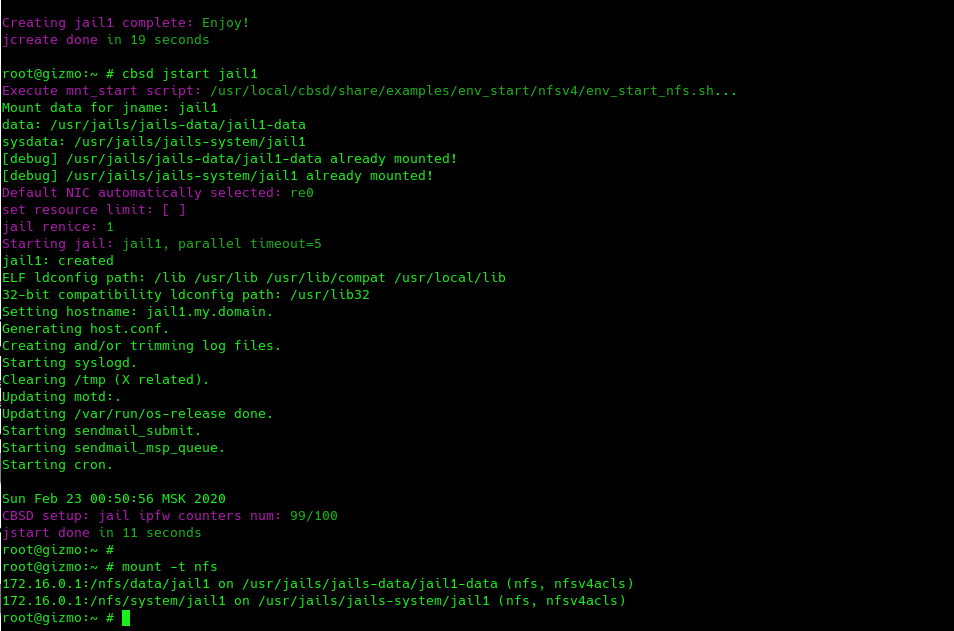

From now on, CBSD will call this script for operations that require the container data (for example, jstart and jcreate).

If you use several CBSD servers, you can easily move a container from one physical server to another by running junregister on one server and jregister on the other:

node1 % cbsd junregister jname='jail*'    # on the source node where the jail is registered
node2 % cbsd jregister jname='jail*'      # on the other (destination) node

This lets you migrate your environments and distribute and scale the load across your cluster more smoothly.

CBSD and GlusterFS

WIP. Short howto available here: CBSD with GlusterFS

CBSD and CEPH

WIP.